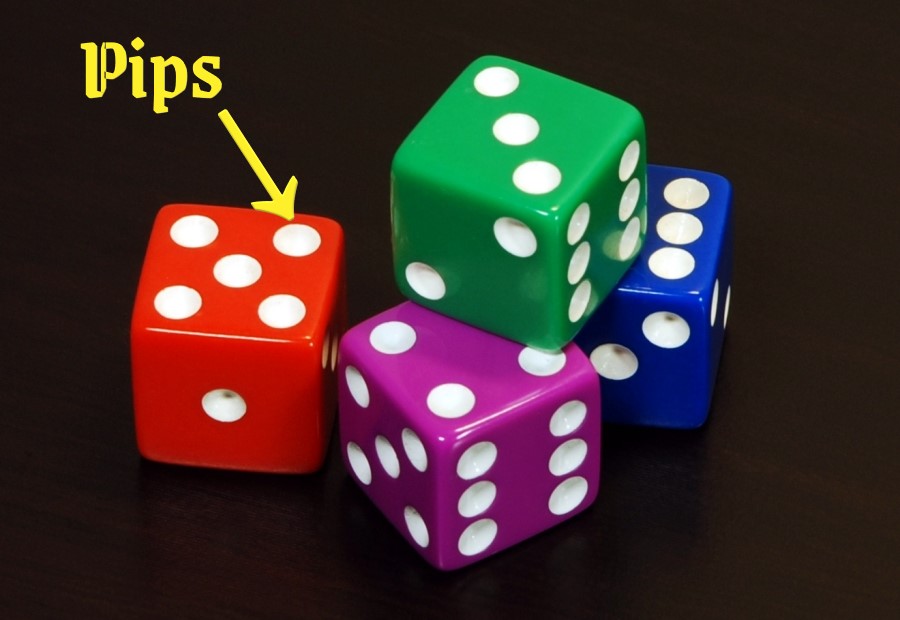

How to Win at Dice. Why then did no one invent probability earlier? It was because most people did not believe in randomness. Anything that happened was a result of God or the gods making it happen. In addition, the people of this time did not have accurate notation or symbols to calculate such things. There is also the idea that because the dice were not fair, no one noticed how likely a number was to show up a certain number of times.

Summa de Arithmetica. The problem is as follows:

Known as the Unfinished Game problem, the puzzle asked how the pot should be divided when a game of dice has to be abandoned before it has been completed. The challenge is to find a division that is fair according to how many rounds each player has won by that stage. (Devlin, 2010, p.580)

Pascal wrote, "I was not able to tell you my entire thoughts regarding the problem of the points by the last post, and at the same time, I have a certain reluctance at doing it for fear lest this admirable harmony, which obtains between us and which is so dear to me should begin to flag, for I am afraid that we may have different opinions on this subject. I wish to lay my whole reasoning before you, and to have you do me the favor to set me straight if I am in error or to endorse me if I am correct. I ask you this in all faith and sincerity for I am not certain even that you will be on my side" (Devlin, 2010, p.580).

Both men solved the problem in different ways, and Fermat's way ended up being the more straightforward approach. They came to the conclusion that the person leading the game would get three-fourths of the pot and the person losing would receive one-fourth of the pot. This discovery overturned the belief that mathematics could not be used to predict the outcome of a future event, and it opened the way to probability theory, risk management, actuarial science and even the insurance industry (Devlin, 2010).
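The three-fourths and one-fourth split can be checked by listing every way the unplayed rounds might fall, which is the spirit of Fermat's approach. The sketch below is only an illustration: the function name `fair_share` is made up here, each round is assumed to be an even 50/50 chance, and the score is assumed to be such that the leader needs one more round while the other player needs two, since the passage above does not state the score.

```python
from fractions import Fraction
from itertools import product


def fair_share(a_needs, b_needs):
    """Share of the pot owed to player A (hypothetical helper).

    Assumes every remaining round is a fair 50/50 between A and B.
    """
    # The game is certain to be settled within this many further rounds.
    max_rounds = a_needs + b_needs - 1
    # List every equally likely way those rounds could fall.
    outcomes = list(product("AB", repeat=max_rounds))
    # A takes the pot whenever A wins at least a_needs of those rounds.
    a_wins = sum(1 for outcome in outcomes if outcome.count("A") >= a_needs)
    return Fraction(a_wins, len(outcomes))


# Assumed score: the leader needs 1 more round, the other player needs 2.
leader_share = fair_share(1, 2)
print(leader_share, 1 - leader_share)  # 3/4 1/4
```

With two possible rounds left there are four equally likely outcomes, and the leader wins in three of them, which is where the three-fourths comes from.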

Bernoulli came to the conclusion that an infinite amount of money would be needed to satisfy this problem. He is also credited with showing how calculus can be applied to probability. The problem arose from a game of chance in which one player tosses a coin and a second player agrees to pay a sum of money if heads comes up on the first toss, double the money if heads appears on the second toss, four times as much if on the third throw, eight times as much if on the fourth toss, and so on. The paradox arose concerning how much should be paid in before the game to make it fair to both players. (Lightner, 1991, p.628)
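To see why the fair entry fee grows without limit, it helps to cap the game at a fixed number of tosses and add up the expected payout. The sketch below is an illustration under stated assumptions: the coin is fair, the payout is tied to the toss on which heads first appears, the base sum is one unit, and `expected_payout` is a name invented for this example.

```python
from fractions import Fraction


def expected_payout(max_tosses, stake=1):
    """Expected payout if the game is cut off after max_tosses tosses.

    Assumes a fair coin and a payout of stake * 2**(k - 1) when the
    first head appears on toss k (hypothetical helper).
    """
    total = Fraction(0)
    for k in range(1, max_tosses + 1):
        # Chance that the first head shows up exactly on toss k.
        prob_first_head_on_k = Fraction(1, 2**k)
        payout = stake * 2 ** (k - 1)
        # Every toss adds the same half unit to the expectation.
        total += prob_first_head_on_k * payout
    return total


for n in (10, 100, 1000):
    print(n, expected_payout(n))
```

Each allowed toss contributes half a unit, so a game capped at 10 tosses is worth 5 units, one capped at 1000 tosses is worth 500, and an uncapped game has no finite fair price.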

Following in the footsteps of his countrymen was Pierre-Simon Laplace. He was a French astronomer and mathematician, dubbed "the father of modern probability". Though he cared far more for the heavens than mathematics, he developed the theory of continuous probability.

He is credited with the formula that Cardano started: the probability of an event is equal to the number of successes, or favorable outcomes, divided by the total number of possible outcomes. The central limit theorem can also be attributed to Laplace (Debnath & Basu, 2014).
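As a small worked case of the favorable-over-total formula, the sketch below counts the ways two fair dice can add up to seven. The dice example and the function name `classical_probability` are chosen here for illustration and are not taken from the sources cited above; the formula only applies when every outcome is equally likely.

```python
from fractions import Fraction
from itertools import product


def classical_probability(sample_space, is_favorable):
    """Favorable outcomes divided by all equally likely outcomes."""
    outcomes = list(sample_space)
    favorable = sum(1 for outcome in outcomes if is_favorable(outcome))
    return Fraction(favorable, len(outcomes))


# All 36 ordered rolls of two six-sided dice.
two_dice = product(range(1, 7), repeat=2)
p_seven = classical_probability(two_dice, lambda roll: sum(roll) == 7)
print(p_seven)  # 1/6
```

Six of the 36 rolls sum to seven, so the ratio reduces to one-sixth.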

Jakob Bernoulli, a Swiss mathematician, discovered the law of large numbers. The law of large numbers builds on the same ratio of favorable to total outcomes and states that if we calculate the proportion over a large number of trials, then the observed proportion will be an accurate representation of the true, or theoretical, probability (Jardine, 2000). Testing Bernoulli's theory were three very bored men. The first was Count Buffon, who tossed a coin 4040 times and got heads 2048 times. The next gentleman was a South African mathematician named John Kerrich. While interned in Denmark during World War II, he tossed a coin 10,000 times and heads appeared 5067 times. The final man, who probably needed some friends, was English statistician Karl Pearson. He tossed a coin 24,000 times and it landed on heads 12,012 times. They showed that the larger the number of tosses, the closer the proportion of heads comes to the true probability. Bernoulli knew what he was talking about.
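The three coin-tossing records can be compared directly by turning each into a proportion of heads. The sketch below uses only the counts quoted in the paragraph above; the helper name `heads_proportion` and the choice of 0.5 as the true probability (a fair coin) are assumptions made for this illustration.

```python
# (name, tosses, heads) as quoted in the text above.
experiments = [
    ("Buffon", 4040, 2048),
    ("Kerrich", 10_000, 5067),
    ("Pearson", 24_000, 12_012),
]


def heads_proportion(tosses, heads):
    """Observed proportion of heads (hypothetical helper)."""
    return heads / tosses


for name, tosses, heads in experiments:
    proportion = heads_proportion(tosses, heads)
    # Distance from 0.5, the probability assumed for a fair coin.
    gap = abs(proportion - 0.5)
    print(f"{name}: {tosses} tosses, proportion {proportion:.4f}, gap {gap:.4f}")
```

Pearson's 24,000 tosses land much closer to one-half than the two shorter runs, while Kerrich's result is only slightly closer than Buffon's, a reminder that the law describes a long-run tendency rather than a guarantee for any single experiment.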